02. Embeddings Intro

Hi! This is Mat.

Word Embeddings

Recall that an embedding is a transformation for representing data with a huge number of classes, such as the words in a vocabulary, more efficiently. Instead of encoding each class as a one-hot vector with one entry per class, an embedding represents it as a dense vector with far fewer dimensions. This greatly improves the ability of networks to learn from data of this sort.
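A minimal NumPy sketch of why this is efficient, assuming illustrative sizes (a 10,000-word vocabulary and 300-dimensional vectors) and a randomly initialized matrix standing in for learned weights. Multiplying a one-hot vector by the embedding matrix just selects one row, so an embedding layer can skip the multiplication and look the row up directly:

```python
import numpy as np

# Illustrative sizes only; real vocabularies and dimensions vary.
vocab_size = 10_000   # number of classes (distinct words)
embed_dim = 300       # length of each dense embedding vector

rng = np.random.default_rng(0)
# Embedding matrix: one row per word. Random here; learned during training in practice.
embedding = rng.normal(size=(vocab_size, embed_dim))

word_id = 42  # hypothetical integer index of some word in the vocabulary

# Naive approach: build a one-hot vector and multiply it by the matrix.
one_hot = np.zeros(vocab_size)
one_hot[word_id] = 1.0
via_matmul = one_hot @ embedding  # shape (embed_dim,)

# Embedding lookup: take the matching row directly, no multiplication needed.
via_lookup = embedding[word_id]   # shape (embed_dim,)

print(np.allclose(via_matmul, via_lookup))  # True
print(via_lookup.shape)                     # (300,)
```

The lookup avoids a 10,000-element vector per word and a matrix product that is almost entirely multiplications by zero.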

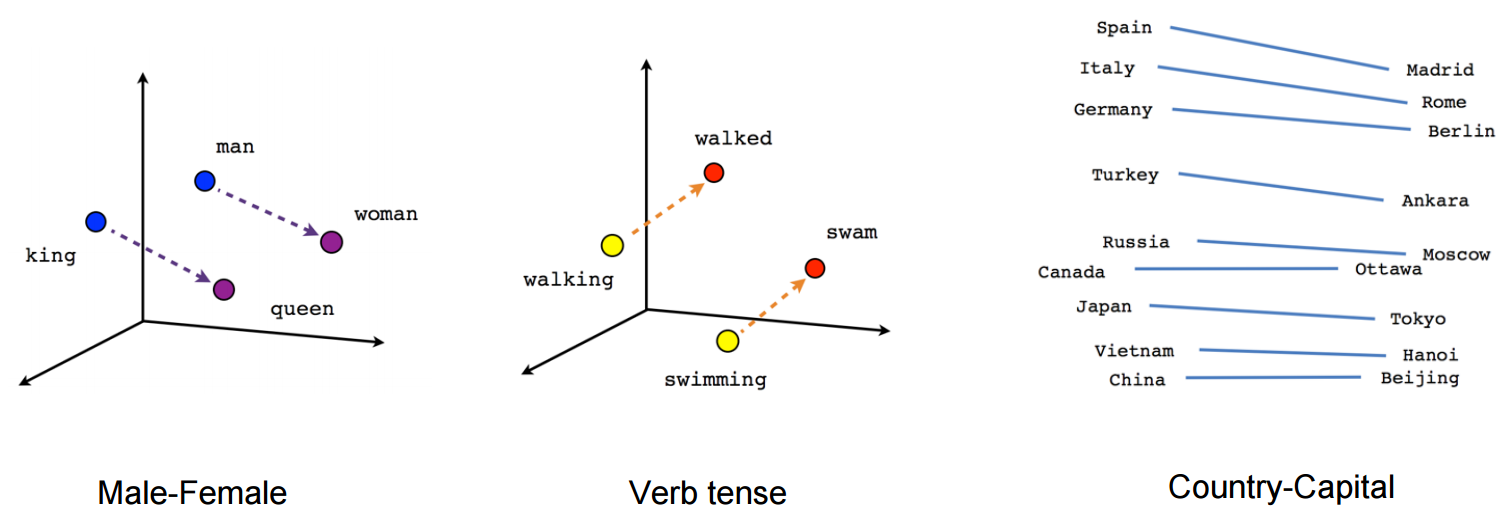

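Word embeddings are particularly interesting because the networks are able to learn semantic relationships between words. For example, the embeddings can capture that the male equivalent of "queen" is "king": the vector for "queen", minus the vector for "woman", plus the vector for "man", lands close to the vector for "king".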

Word embeddings can learn to capture linear relationships
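To make the vector arithmetic concrete, here is a small sketch that solves analogies by computing b - a + c and returning the closest remaining word by cosine similarity. The three-dimensional vectors are hand-made toys labeled with loose meanings for readability; they are not learned embeddings, and the helper names `cosine` and `analogy` are hypothetical:

```python
import numpy as np

# Hand-made toy vectors (NOT learned). Dimensions loosely mean
# [royalty, masculinity, fruitiness]. Real embeddings have hundreds of
# dimensions without human-readable meanings.
vectors = {
    "king":  np.array([0.9,  0.8, 0.0]),
    "queen": np.array([0.9, -0.8, 0.0]),
    "man":   np.array([0.1,  0.8, 0.0]),
    "woman": np.array([0.1, -0.8, 0.0]),
    "apple": np.array([0.0,  0.0, 0.9]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(a, b, c):
    """Solve 'a is to b as c is to ?' using b - a + c, excluding the query words."""
    target = vectors[b] - vectors[a] + vectors[c]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy("woman", "queen", "man"))  # king
print(analogy("man", "king", "woman"))   # queen
```

Excluding the query words matters in practice: with learned embeddings, the nearest vector to b - a + c is often one of the input words themselves.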

These word embeddings are learned using a model such as Word2Vec. In this lesson, you'll implement Word2Vec yourself.
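Word2Vec comes in two variants, CBOW and skip-gram. Assuming the skip-gram variant, which learns to predict the words surrounding a center word, one of the first steps is turning raw text into (center, context) training pairs. A minimal sketch in plain Python, with a hypothetical helper name `skipgram_pairs` and a fixed window size chosen for simplicity:

```python
def skipgram_pairs(words, window=2):
    """Return (center, context) pairs for every word within `window` positions."""
    pairs = []
    for i, center in enumerate(words):
        # Clamp the context window to the bounds of the word list.
        start = max(0, i - window)
        stop = min(len(words), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own context
                pairs.append((center, words[j]))
    return pairs

sentence = "the quick brown fox jumps".split()
for pair in skipgram_pairs(sentence, window=1):
    print(pair)
# ('the', 'quick')
# ('quick', 'the')
# ('quick', 'brown')
# ('brown', 'quick')
# ('brown', 'fox')
# ('fox', 'brown')
# ('fox', 'jumps')
# ('jumps', 'fox')
```

In a full model, each word would first be mapped to an integer index, and the network would be trained so that a center word's embedding helps predict its context words.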

We've built a notebook with exercises and also provided our solutions. You can find both notebooks in the embeddings folder of our GitHub repo.

Requirements: You'll need NumPy, Matplotlib, scikit-learn, tqdm, and TensorFlow 1.0 to run this code.

Next up, I'll walk you through implementing the Word2Vec model.